Autonomous vehicle sensors require the same rigorous testing and validation as the car itself, and one simulation platform is up to the task.

Global tier-1 supplier Continental and software-defined lidar maker AEye announced this week at NVIDIA GTC that they will migrate their intelligent lidar sensor model into NVIDIA DRIVE Sim. The companies are the latest to join the extensive ecosystem of sensor makers using NVIDIA’s end-to-end, cloud-based simulation platform for technology development.

Continental offers a full suite of cameras, radars and ultrasonic sensors, as well as its recently launched short-range flash lidar, some of which are incorporated into the NVIDIA Hyperion autonomous-vehicle development platform.

Last year, Continental and AEye announced a collaboration in which the tier-1 supplier would use the lidar maker’s software-defined architecture to produce a long-range sensor. Now, the companies are contributing this sensor model to DRIVE Sim, helping to bring their vision to the industry.

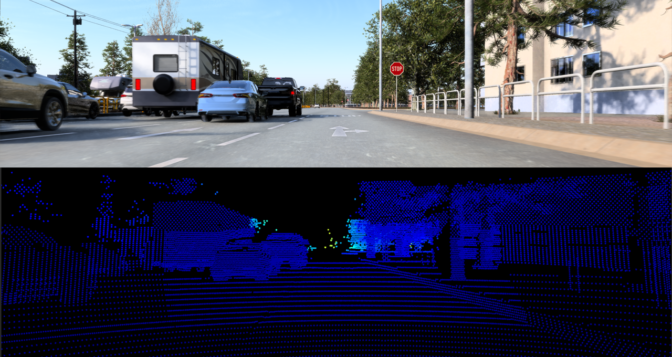

DRIVE Sim is built on the NVIDIA Omniverse platform for connecting and building custom 3D pipelines, providing physically based digital twin environments to develop and validate autonomous vehicles. DRIVE Sim is open and modular: users can create their own extensions or choose from a rich library of sensor plugins from ecosystem partners.

In addition to providing sensor models, partners use the platform to validate their own sensor architectures.

By joining this rich community of DRIVE Sim users, Continental and AEye can now rapidly simulate edge cases in varying environments to test and validate lidar performance.

A Lidar for All Seasons

AEye and Continental are creating HRL 131, a high-performance, long-range lidar for both passenger cars and commercial vehicles that’s software configurable and can adapt to various driving environments.

The lidar incorporates dynamic performance modes in which the laser scan pattern adapts to any automated driving application, from highway driving to dense urban environments, in all weather conditions, including direct sun, night, rain, snow, fog, dust and smoke. It features a range of more than 300 meters for detecting vehicles and 200 meters for detecting pedestrians, and is slated for mass production in 2024.

With DRIVE Sim, developers can recreate obstacles with their exact physical properties and place them in complex highway environments. They can determine which lidar performance modes are suitable for the chosen application based on uncertainties experienced in a particular scenario.

Once identified and tuned, performance modes can be activated on the fly using external cues such as speed, location or even vehicle pitch, which can change with loading conditions, tire-pressure variations and suspension modes.
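The following minimal Python sketch makes the idea of cue-driven mode switching more concrete. It is purely illustrative. Everything in it is a hypothetical assumption and does not reflect the actual interface or logic of the HRL 131 or DRIVE Sim:

* the mode names (`HIGHWAY`, `URBAN`)
* the `VehicleCues` and `ScanConfig` types
* the 80 km/h threshold
* the pitch-compensation rule

It shows only the general pattern of mapping speed, location and pitch to a scan configuration. Thresholds like these are the kind of parameter that simulated scenarios could help tune.

```python
from dataclasses import dataclass
from enum import Enum


class ScanMode(Enum):
    """Hypothetical performance modes, not the sensor's real mode set."""
    HIGHWAY = "highway"  # assumed: narrow field of view focused on long range
    URBAN = "urban"      # assumed: wide field of view at shorter range


@dataclass
class VehicleCues:
    """External cues named in the article; field names are hypothetical."""
    speed_kph: float     # current vehicle speed
    in_urban_area: bool  # derived from location, e.g. a map lookup
    pitch_deg: float     # body pitch, assumed positive = nose up


@dataclass
class ScanConfig:
    mode: ScanMode
    elevation_offset_deg: float  # tilt applied to the scan pattern


# Assumed threshold, for illustration only.
HIGHWAY_SPEED_KPH = 80.0


def select_scan_config(cues: VehicleCues) -> ScanConfig:
    """Pick a scan mode from external cues and compensate for vehicle pitch."""
    if cues.speed_kph >= HIGHWAY_SPEED_KPH and not cues.in_urban_area:
        mode = ScanMode.HIGHWAY
    else:
        mode = ScanMode.URBAN
    # Tilt the scan pattern opposite to the body pitch so it stays level
    # with the road (e.g. a heavily loaded rear pitches the nose up).
    return ScanConfig(mode=mode, elevation_offset_deg=-cues.pitch_deg)


if __name__ == "__main__":
    cfg = select_scan_config(
        VehicleCues(speed_kph=110.0, in_urban_area=False, pitch_deg=0.8)
    )
    print(cfg.mode, cfg.elevation_offset_deg)  # ScanMode.HIGHWAY -0.8
```

Keeping the selection as a pure function of the cues makes it straightforward to replay the same inputs from a recorded or simulated scenario and compare the resulting mode choices.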

The ability to simulate the performance characteristics of a software-defined lidar model adds even greater flexibility to DRIVE Sim, further advancing robust autonomous vehicle development.

"With the scalability and realism of NVIDIA DRIVE Sim, we’re able to validate our long-range lidar technology efficiently," said Gunnar Juergens, head of product line, lidar, at Continental. "It’s a robust tool for the industry to train, test and validate safe self-driving solutions."

The post Continental and AEye Join NVIDIA DRIVE Sim Sensor Ecosystem, Providing Rich Capabilities for AV Development appeared first on NVIDIA Blog.